Collect all Logs From a Docker Swarm Cluster

This post is a quick set of cliff notes on Victor Farcic's post, in which he forwards the logs of all containers running inside a Docker Swarm cluster.

Logspout is the magic that makes this possible.

Logspout is a log router for Docker containers that runs inside Docker. It attaches to all containers on a host, then routes their logs wherever you want. It also has an extensible module system.

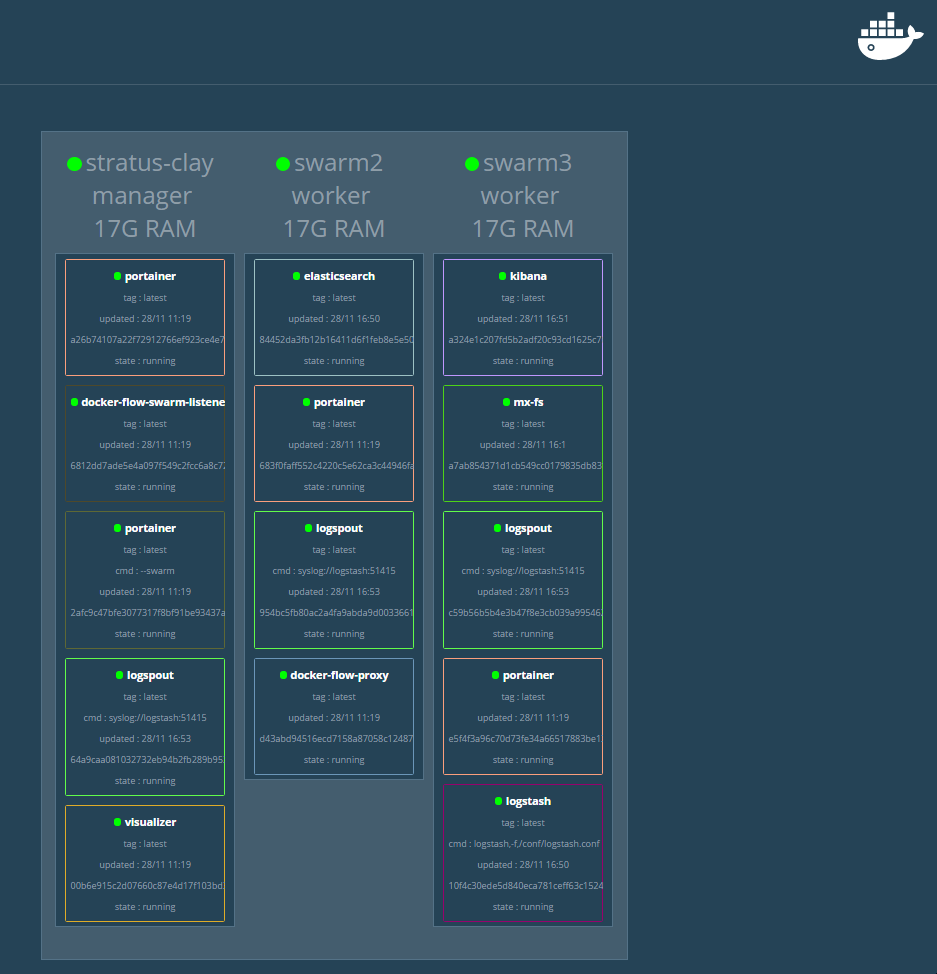

Admin/Viz - if you need to look into what is happening

Let's start by installing Portainer and Docker Swarm Visualizer so we can follow what is happening on the cluster:

docker service create \
--publish 9008:9000 \
--limit-cpu 0.5 \
--name portainer-swarm \
--constraint=node.role==manager \
--mount=type=bind,src=/var/run/docker.sock,dst=/var/run/docker.sock \
portainer/portainer --swarm

docker service create \
--publish 9009:9000 \
--limit-cpu 0.5 \
--name portainer \
--mode global \
--mount=type=bind,src=/var/run/docker.sock,dst=/var/run/docker.sock \
portainer/portainer

docker service create \
--publish=9090:8080 \
--limit-cpu 0.5 \
--name=viz \
--env PORT=9090 \
--constraint=node.role==manager \
--mount=type=bind,src=/var/run/docker.sock,dst=/var/run/docker.sock \
manomarks/visualizer

Next, open http://stratus-clay:9090 (Visualizer), http://stratus-clay:9008 (Portainer for the whole swarm) and http://stratus-clay:9009 (per-node Portainer), and the same URLs on each of the swarm nodes if needed.

A utility function that waits until a service is up (used below for demo purposes):

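function wait_for_service() {
    if [ $# -ne 1 ]; then
        echo "usage: $FUNCNAME <service>"
        echo "e.g.: $FUNCNAME docker-proxy"
        return 1
    fi
    local serviceName=$1 replicas
    while true; do
        # Take the "running/desired" column (e.g. 1/1, or 3/3 for a global service);
        # its position differs between Docker versions, so match it by pattern
        replicas=$(docker service ls | grep -E "(^| )$serviceName( |$)" | awk '{for (i = 1; i <= NF; i++) if ($i ~ /^[0-9]+\/[0-9]+$/) {print $i; exit}}')
        # Ready once every desired task is running
        if [[ -n $replicas && ${replicas%/*} == "${replicas#*/}" && ${replicas#*/} != 0 ]]; then
            break
        fi
        echo "Waiting for the $serviceName service... ($replicas)"
        sleep 5
    done
}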

Alternatively, you can run watch in another shell and check visually that the service is ready:

watch -d -n 2 docker service ls
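The wait_for_service loop never gives up, so a service that cannot start (for example Elasticsearch failing its startup checks) blocks the script forever. A bounded variant could look like the sketch below. It assumes a Docker release whose docker service ls accepts --format with the {{.Name}} and {{.Replicas}} placeholders; the function names replicas_ready and wait_for_service_timeout are hypothetical.

```bash
#!/usr/bin/env bash

# Hypothetical helper: succeeds when a "running/desired" string such as
# "1/1" or "3/3 (max 1 per node)" shows every desired task running.
replicas_ready() {
    local r=${1%% *}                      # drop anything after the first space
    local running=${r%/*} desired=${r#*/}
    [[ $running =~ ^[0-9]+$ && $desired =~ ^[0-9]+$ ]] || return 1
    (( desired > 0 && running == desired ))
}

# Hypothetical bounded variant of wait_for_service.
# Usage: wait_for_service_timeout <service> [timeout-seconds, default 300]
# Returns 0 once the service is ready, 1 if the timeout expires first.
wait_for_service_timeout() {
    local name=$1 timeout=${2:-300} waited=0 replicas
    while true; do
        # Assumes `docker service ls --format` is available; awk keeps only
        # the row whose NAME matches exactly and prints its REPLICAS value.
        replicas=$(docker service ls --format '{{.Name}} {{.Replicas}}' \
            | awk -v n="$name" '$1 == n { $1 = ""; sub(/^ /, ""); print; exit }')
        if replicas_ready "$replicas"; then
            return 0
        fi
        if (( waited >= timeout )); then
            echo "Timed out after ${waited}s waiting for $name ($replicas)" >&2
            return 1
        fi
        echo "Waiting for the $name service... ($replicas)"
        sleep 5
        waited=$((waited + 5))
    done
}
```

For example, wait_for_service_timeout elasticsearch 120 || docker service ps elasticsearch shows the service's tasks (and their errors) instead of waiting indefinitely.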

Create Networks

As in the previous post, we create two overlay networks to isolate the services: proxy, shared with HAProxy, and elk, used by the logging stack.

docker network create --driver overlay proxy

docker network create --driver overlay elk
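Running the two commands above a second time fails because the networks already exist. If you replay the whole setup often, a small sketch that only creates a network when docker network inspect cannot find it may help; ensure_overlay_network is a hypothetical name, not part of Docker.

```bash
#!/usr/bin/env bash

# Hypothetical helper: create an overlay network only if it does not exist
# yet, so the setup can be re-run without "already exists" errors.
ensure_overlay_network() {
    local name=$1
    # `docker network inspect` exits non-zero when the network is unknown
    if docker network inspect "$name" > /dev/null 2>&1; then
        echo "network $name already exists"
    else
        docker network create --driver overlay "$name"
    fi
}
```

Usage: for net in proxy elk; do ensure_overlay_network "$net"; done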

Create Elasticsearch Service

docker service create \
--name elasticsearch \
--network elk \
-p 9200:9200 \
--reserve-memory 500m \
elasticsearch:2.4

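Or run the latest version. Elasticsearch 5 and later check that vm.max_map_count is at least 262144 and refuse to start otherwise, so set it (as root) on every node that may run the container: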

sysctl -w vm.max_map_count=262144

docker service create --name elasticsearch \
--network elk \
-p 9200:9200 \
--reserve-memory 800m \
elasticsearch:latest

wait_for_service elasticsearch
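Because port 9200 is published, you can check that Elasticsearch answers from any node. A minimal sketch, assuming curl is installed on the node; es_status is a hypothetical helper that extracts the status field from the _cluster/health response with sed rather than a JSON parser.

```bash
#!/usr/bin/env bash

# Hypothetical helper: print the cluster health status (green/yellow/red)
# reported by Elasticsearch's _cluster/health endpoint.
# Usage: es_status [base-url]   (defaults to http://localhost:9200)
es_status() {
    local url=${1:-http://localhost:9200}
    curl -s "$url/_cluster/health" \
        | sed -n 's/.*"status" *: *"\([a-z]*\)".*/\1/p'
}
```

For example, es_status http://stratus-clay:9200. On a single Elasticsearch node, yellow is normal once indices with replicas exist, since replica shards cannot be allocated on the same node as their primaries.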

Create Logstash Service

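Run this on all nodes! The Logstash service bind-mounts this directory, so the config file must exist on whichever node its task is scheduled.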

mkdir -p /data/apps/home/jmkhael/elk-playground/docker/logstash

pushd /data/apps/home/jmkhael/elk-playground/docker/logstash

wget https://raw.githubusercontent.com/vfarcic/cloud-provisioning/master/conf/logstash.conf

popd
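If a node cannot reach GitHub, you could write a config locally instead. The sketch below is an assumption, not necessarily identical to Farcic's logstash.conf: it only listens for syslog messages on port 51415 (the port the Logspout service targets) and indexes them into the elasticsearch service. write_logstash_conf is a hypothetical name.

```bash
#!/usr/bin/env bash

# Hypothetical alternative to the wget above: write a minimal logstash.conf
# with a syslog input on port 51415 and an elasticsearch output.
# Usage: write_logstash_conf <directory>
write_logstash_conf() {
    local dir=${1:?usage: write_logstash_conf <directory>}
    mkdir -p "$dir"
    cat > "$dir/logstash.conf" <<'EOF'
input {
  syslog {
    port => 51415
  }
}

output {
  elasticsearch {
    hosts => ["elasticsearch:9200"]
  }
}
EOF
}
```

Usage: write_logstash_conf /data/apps/home/jmkhael/elk-playground/docker/logstash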

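Then create the Logstash service. LOGSPOUT=ignore tells Logspout not to route this container's logs, so Logstash's own output is not fed back into itself: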

docker service create --name logstash \
--mount "type=bind,source=/data/apps/home/jmkhael/elk-playground/docker/logstash,target=/conf" \
--network elk \
-e LOGSPOUT=ignore \
--reserve-memory 100m \
logstash:2.4 logstash -f /conf/logstash.conf

wait_for_service logstash

Or run the latest version:

docker service create --name logstash \
--mount "type=bind,source=/data/apps/home/jmkhael/elk-playground/docker/logstash,target=/conf" \
--network elk \
-e LOGSPOUT=ignore \
--reserve-memory 100m \
logstash:latest logstash -f /conf/logstash.conf

wait_for_service logstash

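Create Docker Listener

The swarm listener watches the cluster for services labelled com.df.notify=true and calls the create/remove URLs below on docker-proxy, so HAProxy is reconfigured whenever such a service appears or goes away.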

docker service create \
--name swarm-listener --network proxy \
--mount "type=bind,source=/var/run/docker.sock,target=/var/run/docker.sock" \
-e DF_NOTIF_CREATE_SERVICE_URL=http://docker-proxy:8080/v1/docker-flow-proxy/reconfigure \
-e DF_NOTIF_REMOVE_SERVICE_URL=http://docker-proxy:8080/v1/docker-flow-proxy/remove \
--constraint 'node.role==manager' vfarcic/docker-flow-swarm-listener

wait_for_service swarm-listener

Create Kibana Service

Kibana is the only service we expose through HAProxy. It needs several paths, not only /app/kibana: the UI also loads its assets from /bundles and sends its queries through /elasticsearch.

docker service create --name kibana \
--network elk \
--network proxy \
-e ELASTICSEARCH_URL=http://elasticsearch:9200 \
--reserve-memory 50m \
--label com.df.notify=true \
--label com.df.distribute=true \
--label com.df.servicePath=/app/kibana,/bundles,/elasticsearch \
--label com.df.port=5601 \
kibana:4.6

wait_for_service kibana

Or run the latest version, which also needs /api, /plugins and /app/timelion exposed:

docker service create --name kibana \
--network elk \
--network proxy \
-e ELASTICSEARCH_URL=http://elasticsearch:9200 \
--reserve-memory 50m \
--label com.df.notify=true \
--label com.df.distribute=true \
--label com.df.servicePath=/app/kibana,/bundles,/elasticsearch,/api,/plugins,/app/timelion \
--label com.df.port=5601 \
kibana:latest

wait_for_service kibana
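The com.df.* labels are what the swarm listener forwards to docker-proxy's reconfigure URL. To reconfigure by hand (for example if a notification was missed), a sketch of that request follows. It assumes, as in Docker Flow Proxy's documentation, that the query parameters are the label names without the com.df. prefix; df_reconfigure_url is a hypothetical helper that only builds the URL.

```bash
#!/usr/bin/env bash

# Hypothetical helper: build the reconfigure URL for a service, mirroring
# the com.df.servicePath, com.df.port and com.df.distribute labels.
# Usage: df_reconfigure_url <proxy-base-url> <service> <service-path> <port>
df_reconfigure_url() {
    local base=$1 service=$2 path=$3 port=$4
    printf '%s/v1/docker-flow-proxy/reconfigure?serviceName=%s&servicePath=%s&port=%s&distribute=true\n' \
        "$base" "$service" "$path" "$port"
}
```

Usage, through the 8080 port that docker-proxy publishes: curl "$(df_reconfigure_url http://stratus-clay:8080 kibana /app/kibana,/bundles,/elasticsearch 5601)"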

Create Logspout Service

We will use Logspout to route all container logs to the Logstash service defined earlier. With --mode global, one Logspout instance runs on every node, so the logs of every container in the swarm are collected.

docker service create --name logspout \
--network elk \
--mode global \
--mount "type=bind,source=/var/run/docker.sock,target=/var/run/docker.sock" \
-e SYSLOG_FORMAT=rfc3164 \
gliderlabs/logspout syslog://logstash:51415

wait_for_service logspout
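SYSLOG_FORMAT=rfc3164 makes Logspout send BSD-syslog (RFC 3164) messages, the format Logstash's syslog input parses. To illustrate only the layout of such a line, here is a sketch; the facility, severity, host and tag values are examples, not necessarily what Logspout fills in, and rfc3164_line is a hypothetical name.

```bash
#!/usr/bin/env bash

# Sketch of an RFC 3164 line: "<PRI>TIMESTAMP HOST TAG: MESSAGE",
# where PRI = facility * 8 + severity.
# Usage: rfc3164_line <facility> <severity> <host> <tag> <message> [timestamp]
rfc3164_line() {
    local facility=$1 severity=$2 host=$3 tag=$4 msg=$5
    # RFC 3164 timestamps look like "Jan  5 14:03:07" (day padded with a space)
    local ts=${6:-$(LC_ALL=C date '+%b %e %H:%M:%S')}
    printf '<%d>%s %s %s: %s\n' "$((facility * 8 + severity))" "$ts" "$host" "$tag" "$msg"
}
```

For example, facility 1 (user) and severity 6 (informational) give PRI 14: rfc3164_line 1 6 node1 kibana hello 'Jan  5 14:03:07' prints <14>Jan  5 14:03:07 node1 kibana: hello.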

Create HAProxy with automatic reconfiguration

docker service create --name docker-proxy \
-p 80:80 \
-p 443:443 \
-p 8080:8080 \
--network proxy \
-e SERVICE_NAME=docker-proxy \
-e MODE=swarm \
-e LISTENER_ADDRESS=swarm-listener \
vfarcic/docker-flow-proxy

wait_for_service docker-proxy

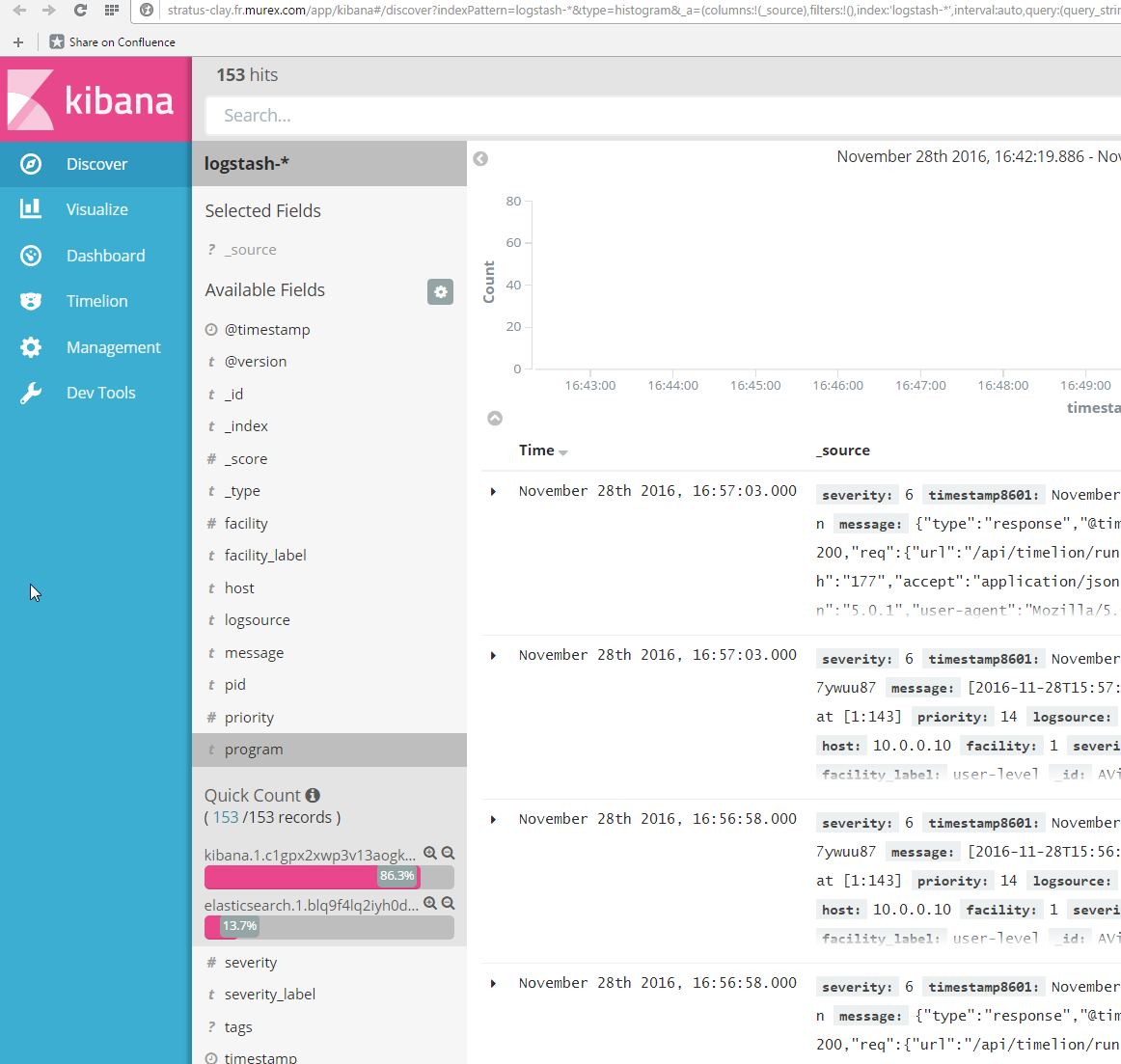

Test

Open Kibana and profit!

Open http://stratus-clay/app/kibana

Check the HAProxy configuration:

curl http://$(hostname):8080/v1/docker-flow-proxy/config
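To confirm that every Kibana path made it into the generated configuration, a rough check is to look for each path in that output. The sketch below is a plain substring search (so /api would also match /apikey), and missing_paths is a hypothetical helper.

```bash
#!/usr/bin/env bash

# Hypothetical helper: read an HAProxy config on stdin and print each
# comma-separated path from the first argument that does not appear in it.
missing_paths() {
    local config path
    config=$(cat)
    local IFS=,                   # split the path list on commas
    for path in $1; do
        # -F: fixed-string match, -q: no output, only the exit status
        grep -qF -- "$path" <<< "$config" || echo "$path"
    done
}
```

Usage: curl -s http://$(hostname):8080/v1/docker-flow-proxy/config | missing_paths /app/kibana,/bundles,/elasticsearch prints nothing when all paths are present.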

Clean up services and networks

docker service rm swarm-listener docker-proxy portainer portainer-swarm viz hello-svc elasticsearch logstash kibana logspout

docker network rm proxy elk

Debug - check the Docker daemon logs

sudo journalctl -fu docker.service