Microservice heaven with Docker

In the previous post we built and published our first image for the world to see.

Sadly, it doesn't do anything useful beyond being based on an Ubuntu image.

In this post we will make it more useful (well, a bit more).

Basically, we will:

- Write the code for our microservice

- Add our service to a Docker image, then build and test it

- Publish our microservice image to the Docker Hub (or to an internal registry, because, well...)

- Touch on a subject we will discuss in later posts: scaling our service to handle more requests per second

Enough talk. Let's go.

Docker my Service

For simplicity, let's say our service is a simple Hello World type of service.

Whatever it does, as domain experts, we first want to define the contract we have to honor for the users of our service.

Define our service contract

Basically, it will respond with a greeting.

So that we can tell which host answered, the greeting will look like: Hello, Docker world from <hostname>.
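To make the contract concrete, here is a minimal sketch, in the same JavaScript we use for the service below, of a check that a response honors it. `parseGreeting` is a hypothetical helper, not part of the service: it returns the hostname from a well-formed greeting and `null` otherwise.

```javascript
"use strict";

// Hypothetical helper: checks a response body against our contract,
// "Hello, Docker world from <hostname>.\n", and returns the hostname,
// or null when the body does not honor the contract.
function parseGreeting(body) {
  const match = /^Hello, Docker world from (.+)\.\n$/.exec(body);
  return match ? match[1] : null;
}

console.log(parseGreeting('Hello, Docker world from 4823e9662202.\n')); // 4823e9662202
console.log(parseGreeting('Goodbye\n')); // null
```

Hostnames containing dots (say, a fully qualified name) still parse, because the pattern only anchors on the final period before the newline.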

So, after two iterations of development (the maximum time it should take to write or rewrite a microservice), we end up with the following microservice:

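./app.js

"use strict";

const http = require('http');
const os = require('os');

// The hostname identifies who answered; inside a container it defaults to the short container ID.
const hostname = os.hostname();

// Every request, whatever its path or method, gets the greeting defined by our contract.
http.createServer((req, res) => {
  res.end('Hello, Docker world from ' + hostname + '.\n');
}).listen(5000); // the port our Dockerfile will EXPOSE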
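One way to make the service testable without Docker is to keep the request handler separate from the call to `listen`. The sketch below is a hypothetical restructuring (`makeHandler` and `createService` are our names, not from the original code); it sends exactly the same response.

```javascript
"use strict";

const http = require('http');
const os = require('os');

// Returns a request handler that greets with the given hostname.
// Taking the hostname as a parameter lets a test inject a fixed value.
function makeHandler(hostname) {
  return (req, res) => {
    res.end('Hello, Docker world from ' + hostname + '.\n');
  };
}

// Builds the server without starting it; the caller decides when and where to listen.
function createService(hostname = os.hostname()) {
  return http.createServer(makeHandler(hostname));
}

module.exports = { makeHandler, createService };
```

In app.js the entry point would then shrink to `createService().listen(5000);`, while a test can call `makeHandler('some-host')` with a stub response object, with no container or open port needed.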

Design the Docker image

Our Dockerfile will look something like:

FROM <base>

MAINTAINER <maintainer>

ADD ./app.js /app.js

CMD ["command", "parameters"]

EXPOSE <port>

with:

- base = ideally an image that is able to run node. We can run docker search node to get a list of candidates. I'll pick a minimal one: base = mhart/alpine-node:latest

- maintainer = this would be us :)

- CMD: command = the command that launches our application. In this stack, command = node and parameters = /app.js; in another stack it could be java -jar or ./myservice...

- port = the port we want to expose. Our application listens on port 5000, so port = 5000. (Note: EXPOSE does not publish the container port on the host; an operator still needs to do that, for example with docker run -p.)

With the above remarks integrated, our Dockerfile becomes:

FROM mhart/alpine-node:latest

MAINTAINER Stratus Clay "calclayer@murex.com"

ADD ./app.js /app.js

CMD ["node", "/app.js"]

EXPOSE 5000

You can find all the code in the docker-playground repository on Stash.

Build the image

As in the previous post, let's build the image and give it many tags, because we can :)

docker build -t myservice:0.0.1 -t myservice:latest -t jmkhael/myservice:0.0.1 .

Test the image

Like any good developer, we will of course test it too:

docker run -p 5000:5000 jmkhael/myservice:0.0.1

Notice the -p flag. It instructs Docker to publish the container's port(s) to the host, in the form host_port:container_port (here, host port 5000 maps to container port 5000).

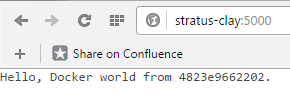

Now it is time to open a browser and navigate to your http://

Curl is another way to do the same from the command line:

curl http://jmkhael.io:5000

and we get the expected response:

Hello, Docker world from 4823e9662202.

Neat!

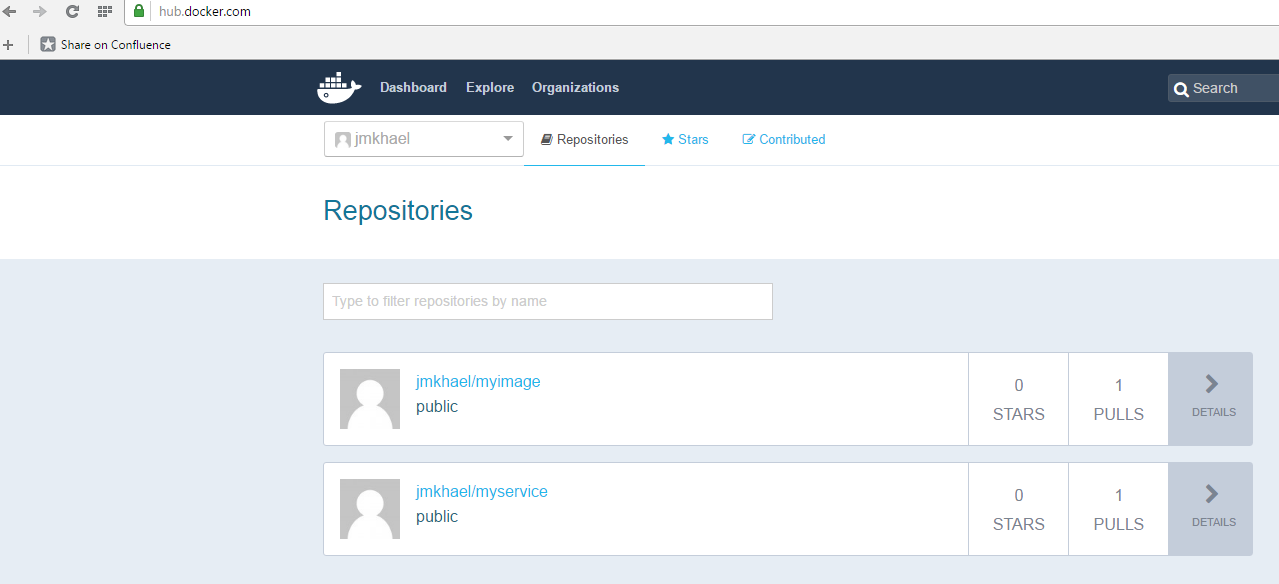

Publishing the image

As you now know, we can make the image public by pushing it:

docker push jmkhael/myservice:0.0.1

Will my service scale?

What if our service becomes very popular? What if every human wants to get greeted by it? Will it hold? How can we scale it?

Let's use ApacheBench (ab) to get an idea of how many requests per second this service can absorb:

ab -c 4 -n 10000 http://jmkhael.io:5000/

Concurrency Level: 4
Time taken for tests: 1.533 seconds
Complete requests: 10000
Total transferred: 1140000 bytes
HTML transferred: 390000 bytes
Requests per second: 6521.55 [#/sec] (mean)
Time per request: 0.613 [ms] (mean)
Time per request: 0.153 [ms] (mean, across all concurrent requests)
Transfer rate: 726.03 [Kbytes/sec] received

6521.55 requests per second from a single instance. That's not bad!
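The figures in ab's report are tied together by simple arithmetic, which helps when comparing runs. The sketch below recomputes them from the raw totals above; `abSummary` is a hypothetical helper, and the formulas are the usual definitions (requests divided by elapsed time, and so on), which agree with the numbers ab printed here.

```javascript
"use strict";

// Recomputes the headline figures of an ab run from its raw totals.
// ab uses the exact elapsed time, so values computed from the rounded
// "Time taken for tests" can differ slightly in the last digits.
function abSummary({ requests, concurrency, seconds, totalBytes }) {
  const rps = requests / seconds;                   // Requests per second (mean)
  return {
    rps,
    msPerRequest: (concurrency * 1000) / rps,       // Time per request (mean)
    msPerRequestAcrossAll: 1000 / rps,              // Time per request (across all concurrent requests)
    kbPerSecond: totalBytes / 1024 / seconds,       // Transfer rate
  };
}

console.log(abSummary({ requests: 10000, concurrency: 4, seconds: 1.533, totalBytes: 1140000 }));
```

With the rounded 1.533 s this gives about 6523 requests/sec instead of 6521.55, and about 726.2 KB/s instead of 726.03; the per-request times come out at roughly 0.613 ms and 0.153 ms, as in the report.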

As we saw in the testing section, we can of course run several instances of our service, each published on a different host port, and hence, in theory, handle more load. This is what we call scaling horizontally.

docker run -d -p 5000:5000 jmkhael/myservice:0.0.1
docker run -d -p 6000:5000 jmkhael/myservice:0.0.1
docker run -d -p 7000:5000 jmkhael/myservice:0.0.1
...

But that implies that the clients of our service (browser, curl, another service...) have to cycle between these ports, and thus know our cluster topology. Darn.
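To make the coupling concrete, here is a minimal sketch of what such a client ends up carrying around. `RoundRobin` is a hypothetical class and the port list is the one from the commands above; nothing here is provided by Docker.

```javascript
"use strict";

// Hypothetical client-side round robin: the client must hard-code the
// list of instances (our "cluster topology") and rotate through it.
class RoundRobin {
  constructor(baseUrls) {
    if (baseUrls.length === 0) throw new Error('need at least one instance');
    this.baseUrls = baseUrls;
    this.index = 0;
  }

  // Returns the next instance URL, wrapping around at the end of the list.
  pick() {
    const url = this.baseUrls[this.index];
    this.index = (this.index + 1) % this.baseUrls.length;
    return url;
  }
}

const instances = new RoundRobin([
  'http://localhost:5000/',
  'http://localhost:6000/',
  'http://localhost:7000/',
]);
for (let i = 0; i < 4; i++) console.log(instances.pick()); // 5000, 6000, 7000, then 5000 again
```

Adding a fourth instance, or losing one, means updating every client's list, which is exactly the coupling we would rather avoid.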

How would I benchmark it now? :(

How do I even test it? Of course, any self-respecting bash artist would shout:

for i in {5000,6000,7000}; do curl http://localhost:$i/ ; done

Hello, Docker world from dcd318148abb.
Hello, Docker world from 2bba03f4c2be.
Hello, Docker world from b582e5c53a53.
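The same check can be done from Node, and querying the instances concurrently makes the topology problem plain: the list of ports is still hard-coded. This is a sketch, not part of the original setup; `getText` and `whoAnswers` are hypothetical names, and it reports a failed connection as an "unreachable" instance instead of crashing.

```javascript
"use strict";

const http = require('http');

// GETs a URL and resolves with the response body (rejects on connection errors).
function getText(url) {
  return new Promise((resolve, reject) => {
    http.get(url, (res) => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', (chunk) => { body += chunk; });
      res.on('end', () => resolve(body));
    }).on('error', reject);
  });
}

// Queries every published port concurrently and reports, per port, the
// hostname from the greeting, null for an off-contract body, or an
// "unreachable" marker when nothing answered (e.g. a crashed container).
async function whoAnswers(ports, host = 'localhost') {
  const results = await Promise.allSettled(
    ports.map((port) => getText(`http://${host}:${port}/`))
  );
  return results.map((result) => {
    if (result.status === 'rejected') return 'unreachable: ' + result.reason.message;
    const match = /^Hello, Docker world from (.+)\.\n$/.exec(result.value);
    return match ? match[1] : null;
  });
}

// Usage, assuming the three containers above are running:
// whoAnswers([5000, 6000, 7000]).then(console.log);
```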

But that doesn't seem like fun, and it already looks like it will complicate many aspects, such as:

- fault tolerance (what happens if a given container crashes?)

- discovery and elasticity

- security ...

Up next

In this post,

- we created our first service,

- baked it into an image,

- deployed that image several times, and

- even benchmarked it and tried to scale it a bit

But that raised questions like:

How do we find all these running instances without knowing their ports? Better, how do we load balance/reverse proxy them behind a single URL? What do we do if a container crashes? How do we scale our service then? How do we ...?

So many questions. We will try to answer some of them in the next post (and hopefully raise new ones).