Scaling my microservice

In the previous post we built and published our first microservice for the world to see! Congratulate yourself!

When we started thinking about how to scale that thing, we faced several questions: how to load balance the instances, how to handle discovery and high availability, how to handle rolling upgrades...

Let's get some questions answered!

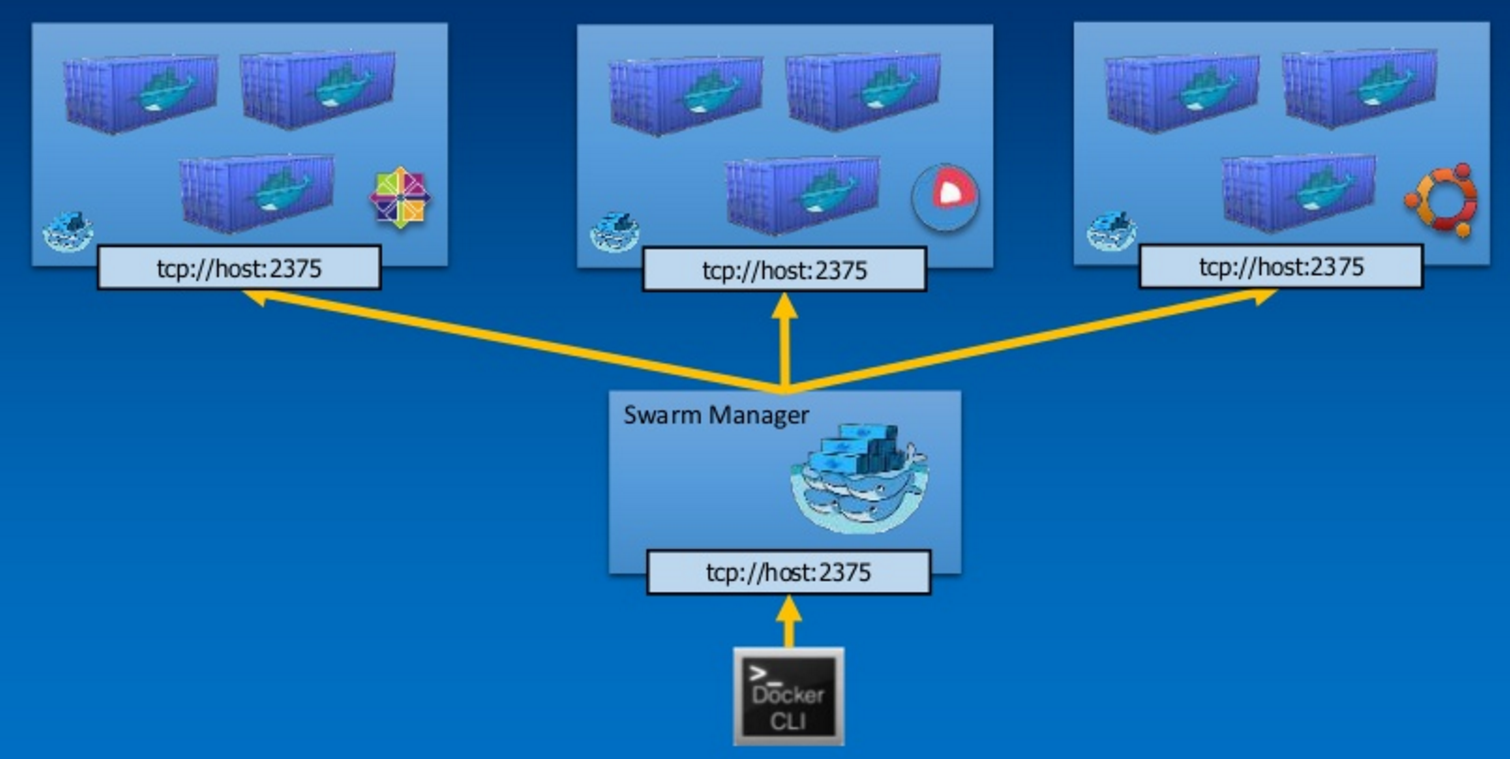

Docker Swarm

Docker comes with built-in cluster management. It turns a group of Docker hosts into a single virtual Docker host. It integrates with discovery services (Consul, etcd, ZooKeeper), has a remote API (yay!) and has advanced scheduling strategies and filters.

More information is available in the official documentation.

Enough talk. Let's scale our service already!

Scaling our greeter

OK. So first we have to create a Swarm.

A swarm has managers (one of which gets elected leader) and workers. A manager, like any good leader, is also a worker by default.

Create a Swarm

To create our Swarm (and thereby make this node its manager), we type:

docker swarm init

If your host has two or more NICs, you need to explicitly tell Docker which address to advertise the service on:

docker swarm init --advertise-addr 10.25.50.1

Note: replace the IP shown here with your own. You can also pass an interface name here, such as eth0.

Docker will reply with something like:

Swarm initialized: current node (drg0jv8uxl7g5kzvutgfogx6c) is now a manager.

To add a worker to this Swarm, run the following command:

docker swarm join \
    --token SWMTKN-1-2j9z7v3yr2rho2nokx7hgix7qpejogshq7amvkmsirlwm6qop2-a3x08wfayv75kk5js2aoz9avz \
    10.25.50.1:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

So if you need to add a worker to the Swarm, you know what to do. If you add another manager, it will be reachable and can be elected leader by the Swarm too.
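If the join command has scrolled away, it can be rebuilt on a manager node. Here is a minimal sketch, assuming a POSIX shell. print_worker_join is a hypothetical helper name, and the address you pass is whatever your manager advertises. It relies on docker swarm join-token -q worker, which prints only the token.

```shell
# Print the command a new worker should run to join the swarm.
# print_worker_join is a hypothetical helper; run it on a manager node.
print_worker_join() {
    manager_addr="$1"    # e.g. 10.25.50.1:2377
    # -q makes join-token print only the token, without the surrounding text
    token="$(docker swarm join-token -q worker)" || return 1
    printf 'docker swarm join --token %s %s\n' "$token" "$manager_addr"
}

# Usage (on a manager):
#   print_worker_join 10.25.50.1:2377
```

Copy the printed line to the machine that should become a worker and run it there.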

Create the service on the swarm

Let's create a service on our Swarm based on the image we built previously (the "Hello, Docker world from hostname." one).

docker service create --publish 9000:5000 --name greeters jmkhael/myservice:0.0.1

Here I used --publish to bind the ports (external=9000, internal=5000), just like using docker run -p 9000:5000.

To check on the service, docker service ls is your friend.

docker service ls

ID NAME REPLICAS IMAGE COMMAND

amvl11q8eu4l greeters 1/1 jmkhael/myservice:0.0.1

Test the service

Let's see if the service is running:

curl http://10.25.50.1:9000

Replace the IP with your machine name/IP or localhost...

Hello, Docker world from 5c95a9f48bd3.

Scale the service

Ok, now for the interesting part. If we want to scale our service, we can do so:

docker service scale greeters=2
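As an aside, the replica count can also be changed through docker service update --replicas, which the Docker documentation describes as equivalent to docker service scale for a single service. A small sketch, where both function names are hypothetical and only there for illustration:

```shell
# Two ways to set the replica count of an existing service.
# Both helper names are hypothetical; arguments are: service name, replica count.
scale_with_scale() {
    docker service scale "$1=$2"
}

scale_with_update() {
    docker service update --replicas "$2" "$1"
}

# Usage:
#   scale_with_scale greeters 2
#   scale_with_update greeters 2
```

docker service scale has the advantage of accepting several name=count pairs in one call.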

Now let's curl 3 times:

curl http://10.25.50.1:9000, curl http://10.25.50.1:9000 and curl http://10.25.50.1:9000

or, as a loop:

for i in {1..3}; do curl http://10.25.50.1:9000/ ; done

Hello, Docker world from 5c95a9f48bd3.

Hello, Docker world from 33470897d0cb.

Hello, Docker world from 5c95a9f48bd3.

We get 2 different hostnames, with round-robin load balancing between the containers, without changing IP:port or doing any discovery whatsoever...

That's neat.
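To see the distribution over more than a handful of requests, the responses can be tallied. This is a minimal sketch, assuming a POSIX shell with curl, sort and uniq available. tally_responses is a hypothetical helper name, and it assumes each container answers with a single line containing its hostname, as the greeter does.

```shell
# Send N requests to URL and count how often each distinct response came back.
# tally_responses is a hypothetical helper name.
tally_responses() {
    url="$1"
    n="$2"
    i=0
    while [ "$i" -lt "$n" ]; do
        # $(...) strips trailing newlines, printf adds exactly one back,
        # so every response ends up on its own line
        printf '%s\n' "$(curl -s "$url")"
        i=$((i + 1))
    done | sort | uniq -c
}

# Usage:
#   tally_responses http://10.25.50.1:9000/ 20
```

Each output line is a count followed by the response text, so with two replicas you would expect two lines with roughly equal counts.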

A quick benchmark shows:

ab -c 10 -n 1000 http://10.25.50.1:9000/

Requests per second: 7889.50 [#/sec] (mean)

Handling fault tolerance

Let's kill (or stop) one of the containers running the service:

Run docker ps to list them, then docker stop one of the containers.

Run docker service ls after a while and see that the replica count drops by one, then gets back to normal.

ID NAME REPLICAS IMAGE COMMAND

amvl11q8eu4l greeters 1/2 jmkhael/myservice:0.0.1

then

ID NAME REPLICAS IMAGE COMMAND

amvl11q8eu4l greeters 2/2 jmkhael/myservice:0.0.1

Also, gratis.

And what if I told you that Docker Swarm runs on the Raspberry Pi, and you can have your dirt cheap Swarm of Pi Zeros for $5 each?
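Instead of re-running docker service ls by hand, the recovery can be polled. Here is a minimal sketch, assuming a POSIX shell and a Docker release whose docker service ls supports --format (likely newer than the one that printed the listings above). The helper names are hypothetical, and the sketch assumes the name filter matches exactly one service and that REPLICAS is printed as plain running/desired.

```shell
# Succeed when a REPLICAS value such as "2/2" has running == desired.
replicas_converged() {
    case "$1" in
        */*) ;;              # must look like running/desired
        *) return 1 ;;
    esac
    running="${1%%/*}"
    desired="${1##*/}"
    [ -n "$running" ] && [ "$running" = "$desired" ]
}

# Poll a service until its replicas converge or the attempts run out.
# Arguments: service name, attempts (default 30), delay in seconds (default 1).
wait_for_service() {
    name="$1"
    attempts="${2:-30}"
    delay="${3:-1}"
    while [ "$attempts" -gt 0 ]; do
        replicas="$(docker service ls --filter "name=$name" --format '{{.Replicas}}')"
        if replicas_converged "$replicas"; then
            return 0
        fi
        attempts=$((attempts - 1))
        sleep "$delay"
    done
    return 1
}

# Usage:
#   wait_for_service greeters && echo "greeters is back to full strength"
```

The function returns non-zero if the service has not converged after the given number of attempts, which makes it usable in scripts.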

Removing the greeter

When you are done with your service, you can just remove it with:

docker service rm greeters

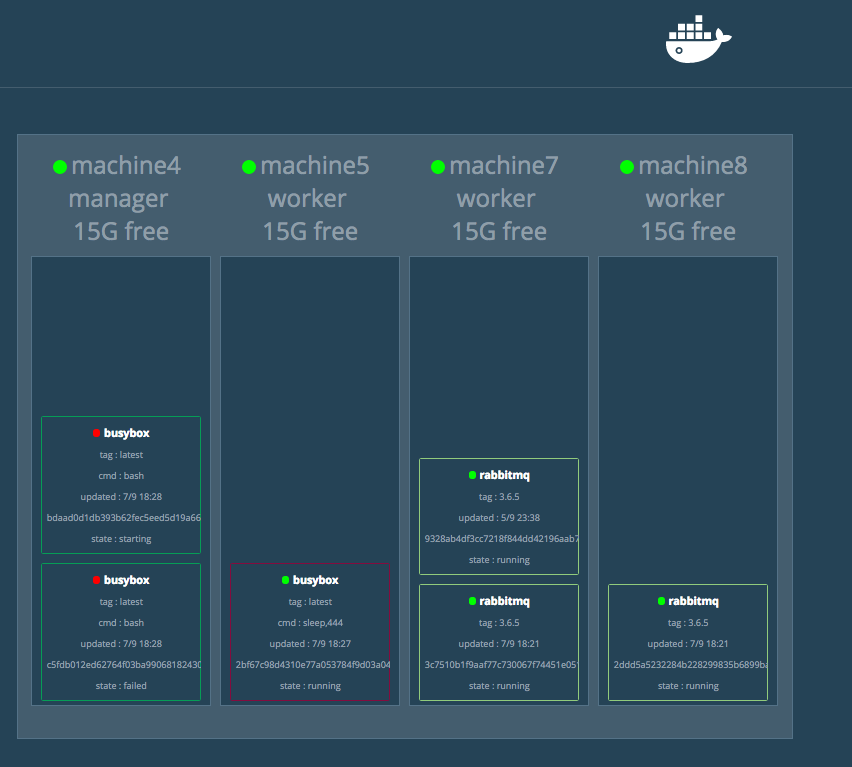

Swarm visualization

A GitHub project is available to visualize the nodes in the swarm and the tasks running on each.

When a service goes down it'll be removed. When a node goes down it won't; instead, the circle at the top will turn red to indicate it went down. Tasks will be removed. Occasionally the Remote API will return incomplete data; for instance, the node can be missing a name. The next time info for that node is pulled, the name will update.

Up next

In this post,

- we created a Swarm,

- deployed our greeter service,

- scaled it and

- even touched a bit on the high availability feature of Docker Swarm

(But we didn't really benchmark it ;) )

In the next post we will see how to deploy our service to the cloud, i.e. outside our organization's premises.